当前位置:

X-MOL 学术

›

Mater. Today Phys.

›

论文详情

Our official English website, www.x-mol.net, welcomes your

feedback! (Note: you will need to create a separate account there.)

Improving machine-learning models in materials science through large datasets

Materials Today Physics ( IF 10.0 ) Pub Date : 2024-09-25 , DOI: 10.1016/j.mtphys.2024.101560 Jonathan Schmidt, Tiago F.T. Cerqueira, Aldo H. Romero, Antoine Loew, Fabian Jäger, Hai-Chen Wang, Silvana Botti, Miguel A.L. Marques

Materials Today Physics ( IF 10.0 ) Pub Date : 2024-09-25 , DOI: 10.1016/j.mtphys.2024.101560 Jonathan Schmidt, Tiago F.T. Cerqueira, Aldo H. Romero, Antoine Loew, Fabian Jäger, Hai-Chen Wang, Silvana Botti, Miguel A.L. Marques

|

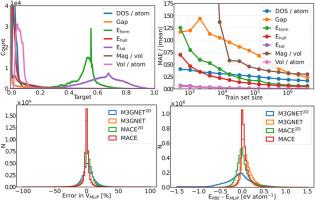

The accuracy of a machine learning model is limited by the quality and quantity of the data available for its training and validation. This problem is particularly challenging in materials science, where large, high-quality, and consistent datasets are scarce. Here we present alexandria , an open database of more than 5 million density-functional theory calculations for periodic three-, two-, and one-dimensional compounds. We use this data to train machine learning models to reproduce seven different properties using both composition-based models and crystal-graph neural networks. In the majority of cases, the error of the models decreases monotonically with the training data, although some graph networks seem to saturate for large training set sizes. Differences in the training can be correlated with the statistical distribution of the different properties. We also observe that graph-networks, that have access to detailed geometrical information, yield in general more accurate models than simple composition-based methods. Finally, we assess several universal machine learning interatomic potentials. Crystal geometries optimised with these force fields are very high quality, but unfortunately the accuracy of the energies is still lacking. Furthermore, we observe some instabilities for regions of chemical space that are undersampled in the training sets used for these models. This study highlights the potential of large-scale, high-quality datasets to improve machine learning models in materials science.

中文翻译:

通过大型数据集改进材料科学中的机器学习模型

机器学习模型的准确性受可用于其训练和验证的数据的质量和数量的限制。这个问题在材料科学中尤其具有挑战性,因为大型、高质量和一致的数据集很少。在这里,我们介绍了 alexandria,这是一个开放数据库,其中包含超过 500 万次周期性三维、二维和一维化合物的密度泛函理论计算。我们使用这些数据来训练机器学习模型,以使用基于成分的模型和晶体图神经网络来重现七种不同的属性。在大多数情况下,模型的误差会随着训练数据的增加而单调减小,尽管一些图形网络似乎会因较大的训练集大小而饱和。训练中的差异可以与不同属性的统计分布相关联。我们还观察到,可以访问详细几何信息的图网络通常比简单的基于组合的方法产生更准确的模型。最后,我们评估了几种通用的机器学习原子间电位。使用这些力场优化的晶体几何形状质量非常高,但不幸的是,能量的准确性仍然不足。此外,我们观察到在用于这些模型的训练集中采样不足的化学空间区域存在一些不稳定性。这项研究强调了大规模、高质量数据集在改进材料科学机器学习模型方面的潜力。

更新日期:2024-09-25

中文翻译:

通过大型数据集改进材料科学中的机器学习模型

机器学习模型的准确性受可用于其训练和验证的数据的质量和数量的限制。这个问题在材料科学中尤其具有挑战性,因为大型、高质量和一致的数据集很少。在这里,我们介绍了 alexandria,这是一个开放数据库,其中包含超过 500 万次周期性三维、二维和一维化合物的密度泛函理论计算。我们使用这些数据来训练机器学习模型,以使用基于成分的模型和晶体图神经网络来重现七种不同的属性。在大多数情况下,模型的误差会随着训练数据的增加而单调减小,尽管一些图形网络似乎会因较大的训练集大小而饱和。训练中的差异可以与不同属性的统计分布相关联。我们还观察到,可以访问详细几何信息的图网络通常比简单的基于组合的方法产生更准确的模型。最后,我们评估了几种通用的机器学习原子间电位。使用这些力场优化的晶体几何形状质量非常高,但不幸的是,能量的准确性仍然不足。此外,我们观察到在用于这些模型的训练集中采样不足的化学空间区域存在一些不稳定性。这项研究强调了大规模、高质量数据集在改进材料科学机器学习模型方面的潜力。

京公网安备 11010802027423号

京公网安备 11010802027423号