
RLSynC: Offline–Online Reinforcement Learning for Synthon Completion

Journal of Chemical Information and Modeling (IF 5.6), Pub Date: 2024-08-18, DOI: 10.1021/acs.jcim.4c00554

Frazier N Baker 1, Ziqi Chen 1, Daniel Adu-Ampratwum 2, Xia Ning 1, 2, 3, 4


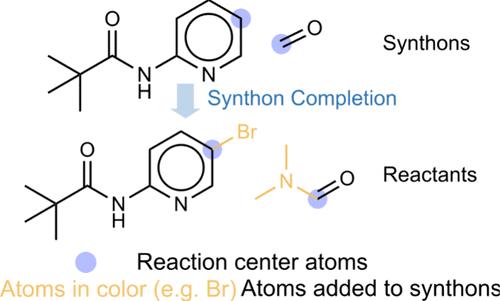

Retrosynthesis is the process of determining the set of reactant molecules that can react to form a desired product. Semitemplate-based retrosynthesis methods, which imitate the reverse logic of synthesis reactions, first predict the reaction centers in the products and then complete the resulting synthons back into reactants. We develop RLSynC, a new offline–online reinforcement learning method for synthon completion in semitemplate-based methods. RLSynC assigns one agent to each synthon, and the agents complete their synthons by taking actions step by step in a synchronized fashion. RLSynC learns its policy from both offline training episodes and online interactions, which allows it to explore new reaction spaces. RLSynC uses a standalone forward synthesis model to evaluate how likely the predicted reactants are to synthesize the product, and this evaluation guides the action search. Our results demonstrate that RLSynC can outperform state-of-the-art synthon completion methods with improvements as high as 14.9%, highlighting its potential in synthesis planning.
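The synchronized, one-agent-per-synthon loop described in the abstract can be illustrated with a toy sketch. This is not the authors' implementation: the string-based synthons, the `ACTIONS` list, `toy_forward_score`, `scripted_policy`, and `run_episode` are all hypothetical stand-ins chosen for illustration, and a real system would edit molecular graphs and query a learned forward synthesis model instead of a lookup table.

```python
import random
from typing import Callable, Dict, List, Tuple

# Hypothetical toy action set: each action appends a label to a synthon string.
# "NOOP" leaves the synthon unchanged. Real actions would edit molecular graphs.
ACTIONS: List[str] = ["NOOP", "+H", "+OH", "+Cl", "+Br"]

# Hypothetical stand-in for a forward synthesis model: a lookup table mapping
# a tuple of completed reactants to the product they are assumed to form.
KNOWN_REACTIONS: Dict[Tuple[str, ...], str] = {("A+OH", "B+H"): "P"}

Policy = Callable[[int, List[str], str], str]


def toy_forward_score(reactants: Tuple[str, ...], product: str) -> float:
    """Return 1.0 if the toy table says the reactants form the product, else 0.0."""
    return 1.0 if KNOWN_REACTIONS.get(reactants) == product else 0.0


def apply_action(synthon: str, action: str) -> str:
    """Apply one toy action to one synthon."""
    return synthon if action == "NOOP" else synthon + action


def run_episode(
    synthons: List[str],
    product: str,
    policy: Policy,
    forward_score: Callable[[Tuple[str, ...], str], float],
    max_steps: int = 3,
) -> Tuple[List[str], List[Tuple[str, ...]], float]:
    """Run one episode in which every agent acts once per step, in sync."""
    states = list(synthons)
    trajectory: List[Tuple[str, ...]] = []
    for _ in range(max_steps):
        # Every agent chooses from the same pre-step snapshot of all synthons,
        # then all chosen actions are applied together.
        actions = [policy(i, states, product) for i in range(len(states))]
        states = [apply_action(s, a) for s, a in zip(states, actions)]
        trajectory.append(tuple(actions))
    # The reward comes from scoring the completed reactants against the product.
    reward = forward_score(tuple(states), product)
    return states, trajectory, reward


def scripted_policy(agent: int, states: List[str], product: str) -> str:
    """Hypothetical fixed policy: complete each synthon once, then do nothing."""
    if "+" in states[agent]:
        return "NOOP"
    return ["+OH", "+H"][agent]


def make_random_policy(rng: random.Random) -> Policy:
    """Hypothetical exploration policy that samples actions uniformly."""
    def policy(agent: int, states: List[str], product: str) -> str:
        return rng.choice(ACTIONS)
    return policy


if __name__ == "__main__":
    print(run_episode(["A", "B"], "P", scripted_policy, toy_forward_score))
```

In this sketch the scripted policy plays the role of a known (offline) episode, while the random policy stands in for online exploration whose outcomes are scored by the forward model. How RLSynC actually combines the two is not detailed in the abstract.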


Updated: 2024-08-18
