当前位置:

X-MOL 学术

›

Robot. Comput.-Integr. Manuf.

›

论文详情

Our official English website, www.x-mol.net, welcomes your feedback! (Note: you will need to create a separate account there.)

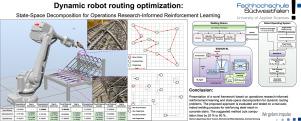

Dynamic robot routing optimization: State–space decomposition for operations research-informed reinforcement learning

Robotics and Computer-Integrated Manufacturing ( IF 9.1 ) Pub Date : 2024-06-25 , DOI: 10.1016/j.rcim.2024.102812 Marlon Löppenberg , Steve Yuwono , Mochammad Rizky Diprasetya , Andreas Schwung

Robotics and Computer-Integrated Manufacturing ( IF 9.1 ) Pub Date : 2024-06-25 , DOI: 10.1016/j.rcim.2024.102812 Marlon Löppenberg , Steve Yuwono , Mochammad Rizky Diprasetya , Andreas Schwung

|

There is a growing interest in implementing artificial intelligence for operations research in the industrial environment. While numerous classic operations research solvers ensure optimal solutions, they often struggle with real-time dynamic objectives and environments, such as dynamic routing problems, which require periodic algorithmic recalibration. To deal with dynamic environments, deep reinforcement learning has shown great potential with its capability as a self-learning and optimizing mechanism. However, the real-world applications of reinforcement learning are relatively limited due to lengthy training time and inefficiency in high-dimensional state spaces. In this study, we introduce two methods to enhance reinforcement learning for dynamic routing optimization. The first method involves transferring knowledge from classic operations research solvers to reinforcement learning during training, which accelerates exploration and reduces lengthy training time. The second method uses a state–space decomposer to transform the high-dimensional state space into a low-dimensional latent space, which allows the reinforcement learning agent to learn efficiently in the latent space. Lastly, we demonstrate the applicability of our approach in an industrial application of an automated welding process, where our approach identifies the shortest welding pathway of an industrial robotic arm to weld a set of dynamically changing target nodes, poses and sizes. The suggested method cuts computation time by 25% to 50% compared to classic routing algorithms.

中文翻译:

动态机器人路径优化:基于运筹学的强化学习的状态空间分解

人们对在工业环境中实施人工智能进行运筹学越来越感兴趣。虽然许多经典的运筹学求解器可确保最佳解决方案,但它们经常与实时动态目标和环境作斗争,例如需要定期算法重新校准的动态路由问题。为了应对动态环境,深度强化学习以其自学习和优化机制的能力显示出巨大的潜力。然而,由于训练时间长且高维状态空间效率低,强化学习的实际应用相对有限。在本研究中,我们介绍了两种增强动态路由优化强化学习的方法。第一种方法涉及在训练期间将知识从经典运筹学求解器转移到强化学习,从而加速探索并减少冗长的训练时间。第二种方法使用状态空间分解器将高维状态空间转换为低维潜在空间,这使得强化学习代理能够在潜在空间中高效学习。最后,我们展示了我们的方法在自动化焊接过程的工业应用中的适用性,其中我们的方法确定了工业机器人臂的最短焊接路径,以焊接一组动态变化的目标节点、姿势和尺寸。与经典路由算法相比,所建议的方法可将计算时间减少 25% 至 50%。

更新日期:2024-06-25

中文翻译:

动态机器人路径优化:基于运筹学的强化学习的状态空间分解

人们对在工业环境中实施人工智能进行运筹学越来越感兴趣。虽然许多经典的运筹学求解器可确保最佳解决方案,但它们经常与实时动态目标和环境作斗争,例如需要定期算法重新校准的动态路由问题。为了应对动态环境,深度强化学习以其自学习和优化机制的能力显示出巨大的潜力。然而,由于训练时间长且高维状态空间效率低,强化学习的实际应用相对有限。在本研究中,我们介绍了两种增强动态路由优化强化学习的方法。第一种方法涉及在训练期间将知识从经典运筹学求解器转移到强化学习,从而加速探索并减少冗长的训练时间。第二种方法使用状态空间分解器将高维状态空间转换为低维潜在空间,这使得强化学习代理能够在潜在空间中高效学习。最后,我们展示了我们的方法在自动化焊接过程的工业应用中的适用性,其中我们的方法确定了工业机器人臂的最短焊接路径,以焊接一组动态变化的目标节点、姿势和尺寸。与经典路由算法相比,所建议的方法可将计算时间减少 25% 至 50%。

京公网安备 11010802027423号

京公网安备 11010802027423号