当前位置:

X-MOL 学术

›

Med. Image Anal.

›

论文详情

Our official English website, www.x-mol.net, welcomes your

feedback! (Note: you will need to create a separate account there.)

A self-supervised spatio-temporal attention network for video-based 3D infant pose estimation

Medical Image Analysis ( IF 10.7 ) Pub Date : 2024-05-18 , DOI: 10.1016/j.media.2024.103208 Wang Yin 1 , Linxi Chen 2 , Xinrui Huang 3 , Chunling Huang 4 , Zhaohong Wang 4 , Yang Bian 5 , You Wan 6 , Yuan Zhou 7 , Tongyan Han 8 , Ming Yi 6

Medical Image Analysis ( IF 10.7 ) Pub Date : 2024-05-18 , DOI: 10.1016/j.media.2024.103208 Wang Yin 1 , Linxi Chen 2 , Xinrui Huang 3 , Chunling Huang 4 , Zhaohong Wang 4 , Yang Bian 5 , You Wan 6 , Yuan Zhou 7 , Tongyan Han 8 , Ming Yi 6

Affiliation

|

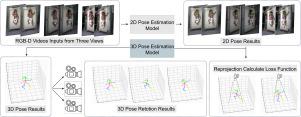

General movement and pose assessment of infants is crucial for the early detection of cerebral palsy (CP). Nevertheless, most human pose estimation methods, in 2D or 3D, focus on adults due to the lack of large datasets and pose annotations on infants. To solve these problems, here we present a model known as YOLO-infantPose, which has been fine-tuned, for infant pose estimation in 2D. We further propose a self-supervised model called STAPose3D for 3D infant pose estimation based on videos. We employ multi-view video data during the training process as a strategy to address the challenge posed by the absence of 3D pose annotations. STAPose3D combines temporal convolution, temporal attention, and graph attention to jointly learn spatio-temporal features of infant pose. Our methods are summarized into two stages: applying YOLO-infantPose on input videos, followed by lifting these 2D poses along with respective confidences for every joint to 3D. The employment of the best-performing 2D detector in the first stage significantly improves the precision of 3D pose estimation. We reveal that fine-tuned YOLO-infantPose outperforms other models tested on our clinical dataset as well as two public datasets MINI-RGBD and YouTube-Infant dataset. Results from our infant movement video dataset demonstrate that STAPose3D effectively comprehends the spatio-temporal features among different views and significantly improves the performance of 3D infant pose estimation in videos. Finally, we explore the clinical application of our method for general movement assessment (GMA) in a clinical dataset annotated as normal writhing movements or abnormal monotonic movements according to the GMA standards. We show that the 3D pose estimation results produced by our STAPose3D model significantly boost the GMA prediction performance than 2D pose estimation. Our code is available at .

中文翻译:

用于基于视频的 3D 婴儿姿势估计的自监督时空注意力网络

婴儿的一般运动和姿势评估对于早期发现脑瘫(CP)至关重要。然而,由于缺乏婴儿的大型数据集和姿势注释,大多数 2D 或 3D 人体姿势估计方法都专注于成人。为了解决这些问题,我们在这里提出了一个名为 YOLO-infantPose 的模型,该模型已经过微调,用于 2D 婴儿姿势估计。我们进一步提出了一种名为 STAPose3D 的自监督模型,用于基于视频的 3D 婴儿姿势估计。我们在训练过程中采用多视图视频数据作为解决缺乏 3D 姿势注释所带来的挑战的策略。 STAPose3D 结合了时间卷积、时间注意力和图注意力来共同学习婴儿姿势的时空特征。我们的方法概括为两个阶段:在输入视频上应用 YOLO-infantPose,然后将这些 2D 姿势以及每个关节各自的置信度提升到 3D。在第一阶段使用性能最好的 2D 检测器显着提高了 3D 位姿估计的精度。我们发现,经过微调的 YOLO-infantPose 优于在我们的临床数据集以及两个公共数据集 MINI-RGBD 和 YouTube-Infant 数据集上测试的其他模型。我们的婴儿运动视频数据集的结果表明,STAPose3D 有效地理解了不同视图之间的时空特征,并显着提高了视频中 3D 婴儿姿势估计的性能。最后,我们探索了我们的一般运动评估(GMA)方法在根据 GMA 标准注释为正常扭体运动或异常单调运动的临床数据集中的临床应用。 我们表明,STAPose3D 模型产生的 3D 姿态估计结果比 2D 姿态估计显着提高了 GMA 预测性能。我们的代码可在 .

更新日期:2024-05-18

中文翻译:

用于基于视频的 3D 婴儿姿势估计的自监督时空注意力网络

婴儿的一般运动和姿势评估对于早期发现脑瘫(CP)至关重要。然而,由于缺乏婴儿的大型数据集和姿势注释,大多数 2D 或 3D 人体姿势估计方法都专注于成人。为了解决这些问题,我们在这里提出了一个名为 YOLO-infantPose 的模型,该模型已经过微调,用于 2D 婴儿姿势估计。我们进一步提出了一种名为 STAPose3D 的自监督模型,用于基于视频的 3D 婴儿姿势估计。我们在训练过程中采用多视图视频数据作为解决缺乏 3D 姿势注释所带来的挑战的策略。 STAPose3D 结合了时间卷积、时间注意力和图注意力来共同学习婴儿姿势的时空特征。我们的方法概括为两个阶段:在输入视频上应用 YOLO-infantPose,然后将这些 2D 姿势以及每个关节各自的置信度提升到 3D。在第一阶段使用性能最好的 2D 检测器显着提高了 3D 位姿估计的精度。我们发现,经过微调的 YOLO-infantPose 优于在我们的临床数据集以及两个公共数据集 MINI-RGBD 和 YouTube-Infant 数据集上测试的其他模型。我们的婴儿运动视频数据集的结果表明,STAPose3D 有效地理解了不同视图之间的时空特征,并显着提高了视频中 3D 婴儿姿势估计的性能。最后,我们探索了我们的一般运动评估(GMA)方法在根据 GMA 标准注释为正常扭体运动或异常单调运动的临床数据集中的临床应用。 我们表明,STAPose3D 模型产生的 3D 姿态估计结果比 2D 姿态估计显着提高了 GMA 预测性能。我们的代码可在 .

京公网安备 11010802027423号

京公网安备 11010802027423号