当前位置:

X-MOL 学术

›

J. Chem. Theory Comput.

›

论文详情

Our official English website, www.x-mol.net, welcomes your

feedback! (Note: you will need to create a separate account there.)

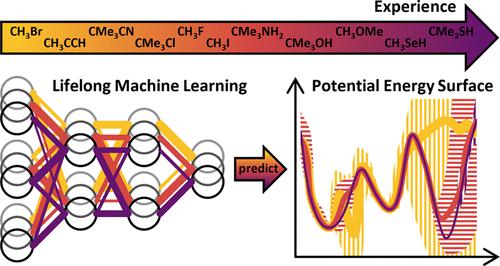

Lifelong Machine Learning Potentials

Journal of Chemical Theory and Computation (IF 5.7), Pub Date: 2023-06-08, DOI: 10.1021/acs.jctc.3c00279, Marco Eckhoff, Markus Reiher

Machine learning potentials (MLPs) trained on accurate quantum chemical data can retain this high accuracy while imposing only small computational demands. On the downside, they need to be trained for each individual system. In recent years, a vast number of MLPs have been trained from scratch, because learning additional data typically requires retraining on all data so as not to forget previously acquired knowledge. Additionally, most common structural descriptors of MLPs cannot efficiently represent a large number of different chemical elements. In this work, we tackle these problems by introducing element-embracing atom-centered symmetry functions (eeACSFs), which combine structural properties and element information from the periodic table. These eeACSFs are key to our development of a lifelong machine learning potential (lMLP). Uncertainty quantification can be exploited to go beyond a fixed, pretrained MLP and arrive at a continuously adapting lMLP, because a predefined level of accuracy can be ensured. To extend the applicability of an lMLP to new systems, we apply continual learning strategies that enable autonomous, on-the-fly training on a continuous stream of new data. For the training of deep neural networks, we propose the continual resilient (CoRe) optimizer and incremental learning strategies relying on rehearsal of data, regularization of parameters, and the architecture of the model.
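The abstract does not state the eeACSF formula, so the following is only a minimal sketch of the general idea under stated assumptions: a Behler-type radial symmetry function with a cosine cutoff, where element information enters as neighbor weights. The choice of period and group numbers as weights, the `ELEMENT_INFO` table, and the functions `cosine_cutoff` and `radial_descriptor` are hypothetical illustrations, not the authors' implementation. It also assumes an isolated cluster (no periodic boundary conditions). The point it shows is that the feature length stays fixed as more elements are added, unlike element-pair-resolved ACSFs.

```python
import numpy as np

# HYPOTHETICAL lookup table: (period, group) per element, used here only as an
# illustrative choice of "element information from the periodic table".
ELEMENT_INFO = {"H": (1, 1), "C": (2, 14), "N": (2, 15), "O": (2, 16)}


def cosine_cutoff(r, r_c):
    """Behler-style cosine cutoff: 0.5 * (cos(pi * r / r_c) + 1) inside r_c, 0 outside."""
    return np.where(r < r_c, 0.5 * (np.cos(np.pi * r / r_c) + 1.0), 0.0)


def radial_descriptor(positions, elements, i, eta, r_c, r_s=0.0):
    """Radial symmetry-function features of atom i (hypothetical eeACSF-like sketch).

    Returns three numbers for one (eta, r_s, r_c) set: the plain radial sum and
    the same sum weighted by each neighbor's period and by its group number.
    Assumes an isolated cluster: all other atoms are neighbors, no periodic images.
    """
    pos = np.asarray(positions, dtype=float)
    dist = np.linalg.norm(pos - pos[i], axis=1)
    neighbors = np.flatnonzero(np.arange(len(pos)) != i)  # every atom except i
    r = dist[neighbors]
    terms = np.exp(-eta * (r - r_s) ** 2) * cosine_cutoff(r, r_c)
    period = np.array([ELEMENT_INFO[elements[j]][0] for j in neighbors], dtype=float)
    group = np.array([ELEMENT_INFO[elements[j]][1] for j in neighbors], dtype=float)
    return np.array([terms.sum(), (period * terms).sum(), (group * terms).sum()])


# Usage: water-like geometry in angstrom; the result always has length 3,
# however many different elements appear among the neighbors.
water = [[0.0, 0.0, 0.0], [0.96, 0.0, 0.0], [-0.24, 0.93, 0.0]]
print(radial_descriptor(water, ["O", "H", "H"], i=0, eta=0.5, r_c=6.0))
```

In a conventional element-resolved scheme, each (eta, r_c) set would produce one radial feature per neighbor element, so the descriptor grows with the number of elements; folding element properties into the weights is one way to avoid that growth.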

Updated: 2023-06-08
