Hierarchical Harris hawks optimizer for feature selection
Lemin Peng¹, Zhennao Cai¹, Ali Asghar Heidari², Lejun Zhang³, Huiling Chen¹
Journal of Advanced Research (IF 11.4), Pub Date: 2023-01-20, DOI: 10.1016/j.jare.2023.01.014

Introduction

The main feature selection methods are filter, wrapper, and embedded methods. A wrapper method evaluates candidate feature subsets with a learning algorithm, so it needs a search strategy to explore the space of subsets. Swarm intelligence algorithms are commonly used for this search, and the performance of wrapper-based feature selection is therefore closely tied to the quality of the search algorithm. It is essential to choose and design a suitable algorithm to improve wrapper-based feature selection. Harris hawks optimization (HHO) is a recently introduced and highly effective optimization method. It converges quickly and has strong global search capability, but it performs unsatisfactorily on high-dimensional or complex problems. We therefore introduce a hierarchy into HHO to improve its ability to handle complex problems and feature selection.
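
To make the wrapper idea concrete, the following minimal sketch scores one candidate feature subset (a 0/1 mask) by the leave-one-out accuracy of a 1-nearest-neighbour classifier restricted to the selected columns. The classifier, the validation scheme, and the name `loo_1nn_accuracy` are illustrative assumptions, not details stated in this abstract; a search algorithm such as HHO or EHHO would call an evaluator like this once per candidate subset.

```python
import math
from typing import List, Optional, Sequence


def loo_1nn_accuracy(
    X: Sequence[Sequence[float]], y: Sequence[int], mask: Sequence[int]
) -> float:
    """Leave-one-out accuracy of a 1-nearest-neighbour classifier that only
    looks at the features whose mask bit is 1 (hypothetical wrapper evaluator)."""
    selected: List[int] = [j for j, bit in enumerate(mask) if bit == 1]
    if not selected:
        return 0.0  # an empty subset carries no information, so score it as useless
    correct = 0
    for i in range(len(X)):
        best_dist = math.inf
        best_label: Optional[int] = None
        for k in range(len(X)):
            if k == i:
                continue  # leave sample i out of its own neighbour search
            # Squared Euclidean distance over the selected features only.
            dist = sum((X[i][j] - X[k][j]) ** 2 for j in selected)
            if dist < best_dist:
                best_dist, best_label = dist, y[k]
        if best_label == y[i]:
            correct += 1
    return correct / len(X)


if __name__ == "__main__":
    # Toy data: feature 0 separates the two classes, feature 1 is noise.
    X = [[0.0, 5.0], [0.1, 1.0], [1.0, 4.0], [1.1, 0.0]]
    y = [0, 0, 1, 1]
    print(loo_1nn_accuracy(X, y, [1, 0]))  # 1.0
    print(loo_1nn_accuracy(X, y, [1, 1]))  # 0.0
```

On this toy data, dropping the noisy feature raises accuracy from 0.0 to 1.0, which is exactly the kind of gain a wrapper search tries to find.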

Objectives

To obtain good classification accuracy with fewer selected features and shorter running time in feature selection, we improve HHO and name the resulting algorithm EHHO. On 30 UCI datasets, the binary version of EHHO achieves high classification accuracy with less running time and fewer features.
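
The objective combines two goals: high accuracy and a small feature subset. A common way to express this in wrapper studies, assumed here rather than taken from the abstract, is a weighted fitness to minimise, fitness = alpha * error + (1 - alpha) * (selected features / total features), with alpha close to 1. The function name `wrapper_fitness` and the default alpha = 0.99 are hypothetical choices for illustration.

```python
from typing import Sequence


def wrapper_fitness(error_rate: float, mask: Sequence[int], alpha: float = 0.99) -> float:
    """Fitness to minimise: weighted classification error plus the fraction of
    selected features. alpha = 0.99 is an assumed, commonly used weighting."""
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    if len(mask) == 0:
        raise ValueError("mask must not be empty")
    # Fraction of features switched on in the 0/1 mask.
    ratio = sum(1 for bit in mask if bit == 1) / len(mask)
    return alpha * error_rate + (1.0 - alpha) * ratio


if __name__ == "__main__":
    few = [1, 1] + [0] * 8    # 2 of 10 features selected
    many = [1] * 8 + [0] * 2  # 8 of 10 features selected
    print(wrapper_fitness(0.10, few))   # about 0.101
    print(wrapper_fitness(0.10, many))  # about 0.107
```

Because alpha is close to 1, the error term dominates; the size penalty mainly separates subsets whose accuracy is similar.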

Methods

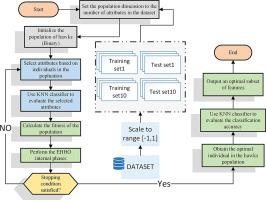

We first conducted extensive experiments on 23 classical benchmark functions, comparing EHHO with many state-of-the-art metaheuristic algorithms. We then converted EHHO into binary EHHO (bEHHO) through a conversion (transfer) function and verified its feature selection ability on 30 UCI datasets.
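
The abstract does not specify which conversion function bEHHO uses. A widely used option for binarizing continuous metaheuristics is the S-shaped (sigmoid) transfer function, sketched below under that assumption: each coordinate of a hawk's continuous position is mapped to a probability, and the corresponding feature is selected when a uniform random draw falls below it. The names `s_shaped` and `binarize` are hypothetical.

```python
import math
import random
from typing import List, Optional, Sequence


def s_shaped(x: float) -> float:
    """Sigmoid transfer function 1 / (1 + exp(-x)), written to avoid overflow."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)  # safe for negative x
    return z / (1.0 + z)


def binarize(position: Sequence[float], rng: Optional[random.Random] = None) -> List[int]:
    """Map a continuous position vector to a 0/1 feature mask, one bit per dimension."""
    if rng is None:
        rng = random.Random()
    # Bit d is 1 (feature selected) with probability s_shaped(position[d]).
    return [1 if rng.random() < s_shaped(x) else 0 for x in position]


if __name__ == "__main__":
    rng = random.Random(42)  # fixed seed for reproducibility
    print(binarize([2.5, -0.3, 0.0, -4.0], rng))
```

With an S-shaped function, coordinates near zero give roughly even odds of selection, while large positive or negative values make the bit almost deterministic; V-shaped transfer functions are a common alternative.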

Results

Experiments on 23 benchmark functions show that EHHO converges faster and reaches lower minimum values than its peers. Compared with HHO, EHHO substantially mitigates HHO's weakness on complex functions. Moreover, on 30 datasets from the UCI repository, bEHHO outperforms the other optimization algorithms it was compared with.

Conclusion

Compared with the original bHHO, bEHHO achieves high classification accuracy with fewer selected features and also requires less running time.
