Computers in Biology and Medicine (IF 7.0), Pub Date: 2022-10-12, DOI: 10.1016/j.compbiomed.2022.106206

Haojia Wang 1, Xicheng Chen 1, Rui Yu 2, Zeliang Wei 1, Tianhua Yao 1, Chengcheng Gao 1, Yang Li 1, Zhenyan Wang 1, Dong Yi 1, Yazhou Wu 1

Background

U-Net consists of an encoder, a decoder, and skip connections, and it has become the benchmark network for medical image segmentation. However, traditional skip connections directly fuse low-level and high-level convolutional features despite the semantic gap between them, which can lead to blurred feature maps and segmentation errors in the target region.

Objective

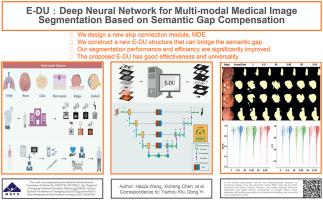

We use spatial enhancement filtering to compensate for the semantic gap and propose an enhanced dense U-Net (E-DU) for multimodal medical image segmentation, with the aim of improving segmentation performance and efficiency.

Methods

We replace the traditional skip connection with a multiscale denoise enhancement (MDE) module: the encoder features first pass through a deep convolution with the spatial enhancement filter and are only then combined with the decoder features. The resulting network, E-DU, is a simple and efficient deep fully convolutional architecture that both fuses semantically different features and denoises and enhances the feature maps.
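The idea of filtering encoder features before fusing them can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration: the abstract does not specify the MDE module's filter, its scales, or how features are combined, so the 3x3 sharpening kernel `SHARPEN`, the helper names `filter2d` and `enhanced_skip`, per-channel filtering, and channel concatenation are hypothetical stand-ins rather than the authors' design.

```python
# Hypothetical 3x3 sharpening kernel (identity plus a Laplacian term).
# This is an assumption for illustration, not the filter used in the paper.
SHARPEN = [[0, -1, 0],
           [-1, 5, -1],
           [0, -1, 0]]


def filter2d(feature, kernel):
    """Cross-correlate a 2D feature map with a 3x3 kernel, using zero padding
    so that the output has the same height and width as the input."""
    h, w = len(feature), len(feature[0])
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            acc = 0.0
            for di in (-1, 0, 1):
                for dj in (-1, 0, 1):
                    y, x = i + di, j + dj
                    if 0 <= y < h and 0 <= x < w:  # outside the map counts as zero
                        acc += kernel[di + 1][dj + 1] * feature[y][x]
            out[i][j] = acc
    return out


def enhanced_skip(encoder_channels, decoder_channels, kernel=SHARPEN):
    """Filter every encoder channel separately, then concatenate the result
    with the decoder channels along the channel axis (assumed fusion rule)."""
    enhanced = [filter2d(channel, kernel) for channel in encoder_channels]
    return enhanced + decoder_channels


# One 3x3 encoder channel of ones and one 3x3 decoder channel of zeros.
encoder = [[[1, 1, 1], [1, 1, 1], [1, 1, 1]]]
decoder = [[[0, 0, 0], [0, 0, 0], [0, 0, 0]]]
fused = enhanced_skip(encoder, decoder)
print(len(fused), fused[0])
```

A plain skip connection would correspond to `encoder + decoder` with no filtering step; the sketch differs only in that the encoder channels are filtered first.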

Results

We ran experiments on medical image segmentation datasets covering seven image modalities and performed ablation studies by combining MDE with various baseline networks. Within the U-Net family, E-DU achieved the best segmentation results on evaluation metrics such as the Dice similarity coefficient (DSC), with DSC values of 97.78%, 97.64%, 95.31%, 94.42%, 94.93%, 98.85%, and 98.38% on the seven modalities, respectively. Adding the MDE module to attention-based networks also improved segmentation performance and efficiency, which indicates that the module generalizes. Our method is also competitive with advanced methods.
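For readers unfamiliar with the metric, DSC for a predicted mask P and a ground-truth mask G is conventionally defined as 2|P ∩ G| / (|P| + |G|). The sketch below computes it for small binary masks. The function name `dice_coefficient`, the nested-list mask format, and the convention of returning 1.0 when both masks are empty are assumptions for illustration; the abstract does not describe the authors' evaluation code.

```python
def dice_coefficient(pred, target):
    """Dice similarity coefficient between two binary masks given as
    nested lists of 0/1 values with identical shapes."""
    pred_flat = [int(bool(v)) for row in pred for v in row]
    target_flat = [int(bool(v)) for row in target for v in row]
    if len(pred_flat) != len(target_flat):
        raise ValueError("masks must contain the same number of pixels")

    # Pixels that are foreground in both masks.
    intersection = sum(p & t for p, t in zip(pred_flat, target_flat))
    total = sum(pred_flat) + sum(target_flat)
    if total == 0:
        return 1.0  # assumed convention: two empty masks agree perfectly
    return 2.0 * intersection / total


pred = [[1, 1, 0],
        [0, 1, 0]]
target = [[1, 0, 0],
          [0, 1, 1]]
dsc = dice_coefficient(pred, target)
print(f"DSC = {100 * dsc:.2f}%")
```

In this toy case the masks share 2 foreground pixels and each contains 3, so the score is 2·2 / (3 + 3), or about 66.67%. The values reported above are on the same percentage scale.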

Conclusion

The proposed MDE module delivers good segmentation quality and runtime efficiency, and it extends easily to medical segmentation datasets of multiple modalities. The approach can support clinical multimodal medical image segmentation and make full use of image information to provide clinical decision support, which gives it considerable application value and potential for wider adoption.

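Title (from the Chinese translation):

E-DU: Deep neural network for multimodal medical image segmentation based on semantic gap compensation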
